Best Practices to Become a Data Engineer

Approaches to set up your environment, to learn useful programming languages, and to build pipelines.

Q: I come from a business intelligence background. I'm looking to make the transition to becoming a data engineer. How do I go about doing this? Do I need to learn NumPy? Do I need to learn Pandas? What are the key concepts that I need to understand in order to ramp up on data engineering quickly?

If you're doing business intelligence, maybe you're working with data visualization tools such as Power BI or Tableau. Either way, you're doing a lot of analysis. At some point, you will be ready to ingest new data so that you can derive richer, deeper insights – you’re ready to start the journey to become a data engineer.

The following are the six main things that you need to do in order to get to the next level in your career as a future data engineer:

Step 1: Learn the Basics of SQL

If you have never done ANY programming before, then the first place that you want to start is by learning to sift through data. The way this is done is by learning a language called SQL (Structured Query Language). Among other things, SQL is a tool that can be used to look at relevant information in TABLES, to choose columns with SELECT statements, and to filter rows with WHERE clauses.
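As a minimal sketch of SELECT and WHERE, the following uses Python's built-in sqlite3 module so that it runs without any setup. The customers table and its rows are assumptions made up for illustration; they are not tied to any particular database.

```python
import sqlite3

# Hypothetical in-memory table, used only for illustration
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (category TEXT, name TEXT)")
conn.executemany(
    "INSERT INTO customers (category, name) VALUES (?, ?)",
    [("A", "Ralph Brooks"), ("B", "John Doe"), ("B", "Jane Doe")],
)

# SELECT picks the columns; WHERE keeps only the rows that match
rows = conn.execute(
    "SELECT name FROM customers WHERE category = ? ORDER BY name", ("B",)
).fetchall()
print(rows)
conn.close()
```

The same SELECT ... WHERE pattern carries over to other SQL dialects, although details such as parameter placeholders differ between databases.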

GOOD RESOURCES TO GET STARTED WITH SQL

- khanacademy.org - Khan Academy has a good set of videos that goes through the basics of SQL. The videos cover how to select data, and how to join data together.

- Head First SQL – When I was first starting out, I definitely looked at one or two Head First books published by O’Reilly. Head First covers the basics of a topic while focusing on different ways to engage the brain so that you learn the material quickly.

- SQL Cookbook - SQL Cookbook gives step-by-step instructions on ways to look at data ("recipes") and different ways to think about how to analyze data. The book helps you form an intuition about how to approach data analysis.

- Google Cloud Reference Documentation for BigQuery

I am a big fan of jumping into the deep end of the pool and learning how to swim quickly. Frankly, there's no better way to do this than Standard SQL with BigQuery in Google Cloud.

If you start your SQL journey with BigQuery, then you are learning about Google Cloud technology while you are learning data analysis. If you are going this route, then a good place to start is to go through the BigQuery for Data Warehousing tutorial.

When you learn this Google version of SQL (Standard SQL), you will not only learn how to analyze data, but you will also learn how to make predictions on data. For example, you could use this flavor of SQL to predict sales with a linear regression. As another example, you could also use this language to do a basic prediction as to whether or not a customer will make a transaction with a logistic regression.

Step 2: Learn the Basics of Python

If you want to get to the next level in your career, it is almost essential to learn some type of programming language. Personally, I would recommend learning Python. Python is flexible and it is relatively straightforward to learn. You can use it to process streaming data with data pipelines, to analyze data with Jupyter notebooks, and to build artificial intelligence models. For me, it is one language that does a lot.

These are some top resources to learn Python, but they are not all free resources. In some cases, you “get what you pay for”, and paying for something may save you a lot of time in the long run.

BEST RESOURCES TO GET STARTED WITH PYTHON

- Learn Python 3 the Hard Way – White Owl Education has no affiliation with the author of “Learn Python 3 the Hard Way”, but when I was learning Python this one was one of the books that I used in order to ramp up.

- One note here - The book is very specific about what editor to use and how to get through the class. I would follow the instructions in the book to the letter without deviation.

- Official Python Tutorial - The official Python tutorial (which is part of the reference documentation) is actually pretty good, but it doesn't endorse any particular software for writing Python. Because of this, you're still better off using a book like "Learn Python 3 the Hard Way" before jumping into the official tutorial.

- Official Python Documentation – Think of the official Python Documentation like a dictionary. You're not going to read this front to back, but it is definitely a reference that you may want to use from time to time.

Step 3: Learn How to Navigate Code Quickly

When you are developing in a programming language, it is helpful to write code in a program that can check for syntax errors, and that can quickly navigate through large amounts of code (large "code bases"). These programs that help you write code are called Integrated Development Environments, and the following are two of the most popular IDEs:

TOP DEVELOPMENT ENVIRONMENTS FOR PYTHON

- PyCharm: White Owl Education has a free tutorial on how to set up PyCharm on your laptop.

- Visual Studio Code: This is a lightweight integrated development environment. As a side note, colleagues of mine swear by VS Code for Python development, but I have only used VS Code for React, JavaScript, and TypeScript development.

HOW MUCH PYTHON DO YOU NEED TO KNOW TO MASTER DATA ENGINEERING?

On this journey to becoming a data engineer, you need to master the basics of Python. How do you do this? Practice a technique and then practice that same technique again and again until it becomes intuitive. This is critical because as you start to use Python to stream in data or to “do artificial intelligence,” you don't want to worry about very basic Python syntax mistakes.

So what do you need to know? You should be able to do the following in your sleep:

- Create a function

- Create a Python class

- Use control flow with ‘if’ and ‘for’

- Debug with a logger

- Read from and write to a file

- Create a Python module

- Create a basic unit test

- Use the requests package to pull data from an API
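Several of the skills above can be sketched in one small script. This is only an illustration under assumed names: count_words, WordCounter, count_file, and the sample file are all hypothetical, and the requests item is left out so that the example runs without network access.

```python
import logging
import tempfile
import unittest
from pathlib import Path

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)


def count_words(text):
    """Function: return the number of whitespace-separated words."""
    return len(text.split())


class WordCounter:
    """Class: keeps a running total of the words seen so far."""

    def __init__(self):
        self.total = 0

    def add(self, text):
        self.total += count_words(text)
        logger.info("running total is %d", self.total)  # debug with a logger
        return self.total


def count_file(path):
    """File reading plus control flow: count words line by line."""
    counter = WordCounter()
    with open(path, encoding="utf-8") as handle:
        for line in handle:
            if line.strip():  # skip blank lines
                counter.add(line)
    return counter.total


class CountWordsTest(unittest.TestCase):
    """A basic unit test; it can be run with `python -m unittest`."""

    def test_count_words(self):
        self.assertEqual(count_words("data engineering is fun"), 4)


# Write a small file, then read it back
with tempfile.TemporaryDirectory() as tmp_dir:
    sample_path = Path(tmp_dir) / "sample_words.txt"
    sample_path.write_text("hello world\n\nhello again data\n", encoding="utf-8")
    total_words = count_file(sample_path)

print(total_words)
```

Saving this script as a .py file also makes it a module, so another script could import count_words from it.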

Step 4: Learn the Basics of NumPy

As you start to analyze more and more data, you may start to group things together, you may convert these things into numbers (operate with numbers), and eventually you may start to apply math operations to these groups in order to make predictions about data. Pretty cool, right?

NumPy is a Python package that helps you make these changes efficiently. In addition, concepts from NumPy can be seen in a data analysis package called Pandas. Concepts from NumPy are also seen in machine learning, and specifically they are seen in a machine learning framework from Google called TensorFlow. Long story short – if you are planning to do data analysis or machine learning, then sooner or later you will need to learn NumPy.

In order to better understand, consider the following NumPy example:

NumPy Example

In Python, this is a list:

a_list = [0, 1, 2, 3, 4, 5]

This is just a list with 6 numbers (0 to 5). Here is the same code using NumPy:

import numpy as np

nd_array = np.arange(6)

This NumPy array ("nd_array") also contains 6 numbers.

>>> nd_array

array([0, 1, 2, 3, 4, 5])

What if we now wanted to take these numbers and put them in two groups of 3? How could we express that?

With NumPy, we only need the following line of code:

>>> B = np.reshape(nd_array, (-1, 3))

>>> B

array([[0, 1, 2],
       [3, 4, 5]])

Let's break this one line of code down into steps:

STEP 1: We're going to use the NumPy package (“np”).

STEP 2: Use a function within that package called reshape.

STEP 3: We're going to reshape the array, and we're going to put it into different groups ("dimensions").

- The (-1, 3) is the new shape: each group has size 3 (the 3), and the -1 tells NumPy to work out how many groups are needed (here, 6 / 3 = 2 groups).
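To check how the -1 behaves, here is a small sketch that reuses nd_array and B from the example above. The names same and reshape_failed are hypothetical helpers added for illustration.

```python
import numpy as np

nd_array = np.arange(6)

# -1 asks NumPy to infer that dimension from the array's size: 6 / 3 = 2 rows
B = np.reshape(nd_array, (-1, 3))
print(B.shape)

# Spelling out the number of rows gives the same result
same = np.reshape(nd_array, (2, 3))
print(np.array_equal(B, same))

# A group size that does not divide 6 evenly raises a ValueError
try:
    np.reshape(nd_array, (-1, 4))
    reshape_failed = False
except ValueError:
    reshape_failed = True
print(reshape_failed)
```

Only one dimension can be -1, because NumPy needs the other dimensions in order to infer the missing one.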

BEST BOOKS AND RESOURCES ON NUMPY

When you are starting out, you need to be able to do the following with NumPy:

- Install NumPy

- Understand how to determine the shape and size of an array

- Understand how to index and slice an array
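As a rough sketch of the last two items, the following builds the same 2 x 3 array as the earlier example and then inspects it. The variable names (grid, first_row, and so on) are assumptions chosen for illustration.

```python
import numpy as np

grid = np.arange(6).reshape(2, 3)  # rows are [0, 1, 2] and [3, 4, 5]

# Shape and size
print(grid.shape)  # (rows, columns)
print(grid.size)   # total number of elements
print(grid.ndim)   # number of dimensions

# Indexing: a whole row, then a single element (row 1, column 2)
first_row = grid[0]
element = grid[1, 2]

# Slicing: ":" means "everything along this dimension"
last_column = grid[:, -1]
first_two_columns = grid[:, :2]

print(first_row, element, last_column)
print(first_two_columns)
```

Indexing starts at 0, and a negative index counts from the end, which is why -1 selects the last column.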

The following resources can help with this:

- NumPy Installation documentation - This is another reference which gives a different approach on how to install NumPy.

- Python for Data Analysis - This book by Wes McKinney is a couple of years old, but it gives a really good walkthrough of NumPy and how to use it in an interactive Python environment called a Jupyter Notebook.

- Google Colaboratory - If you are looking for a free resource to run Python, NumPy, and TensorFlow, you may want to try Google Colab. This site allows you to run code using GPUs that work well with machine learning operations.

Step 5: Learn the Basics of Pandas

If you are making a transition to a career as a data engineer, then the manipulation of data and the cleaning of data are going to become extremely important. The first step in this journey may be to take a subset of data, and to work with Pandas (a Python package which is “Excel on steroids”) in order to really understand the data.

In order to better understand, consider the following Pandas example:

Pandas Example

If you want to follow along, check out the corresponding Google Colab Notebook

In this example we are going to read from a comma-separated values (CSV) file. This file will contain 3 names. In Unix, we use the echo command to create the file.

# ingestdata.sh
# ">" creates (or overwrites) the file; ">>" appends a line to it
echo "category,name" > customers.csv
echo "A, Ralph Brooks" >> customers.csv
echo "B, John Doe" >> customers.csv
echo "B, Jane Doe" >> customers.csv

Pandas is used to read in this file.

import pandas as pd

df = pd.read_csv("customers.csv")

This creates a dataframe (df in the above example), which is an Excel-like grid of data that contains category and name (the first and last name).

Now we are going to create a function that is going to do the following:

- Take a name and split it by the space character into two components

- Look at the first component - the first_name

- If the name_list has two parts (a first name and a last name), return the first name

- Return nothing, if you can't identify a first name and a last name

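def get_first_name(full_name):
    # split() with no arguments splits on whitespace and ignores leading spaces
    name_list = full_name.split()
    if len(name_list) == 2:
        # if the list has two elements, then there is a first name and a last name
        first_name = name_list[0]
        return first_name
    else:
        # checking the length first also avoids an IndexError on an empty name
        return ""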

We then extract only the names from the dataframe into a Python list.

names = df.name.to_list()

Then we map the get_first_name function to our list of names.

>>> first_names = list(map(get_first_name, names))

>>> first_names

['Ralph', 'John', 'Jane']

Pretty powerful stuff. We just used the pandas library to read in data and to process the name part of that data.

When you are starting out with data analysis with Pandas, you want to make sure that you take the time to master the following:

- Read data from a comma separated file into a DataFrame

- Select only those rows in a dataframe which have a certain value

In the Pandas Example that we covered, you should be able to create a subset of our dataframe which only contains the second category (a dataframe that only contains category B).

- Merge two dataframes together based on a common key using the merge function
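The three skills above can be sketched as follows. The CSV text mirrors the customers.csv file from the example, but it is read from an in-memory string so that the sketch is self-contained. The discounts table and its values are assumptions made up for illustration.

```python
import io

import pandas as pd

# Same contents as customers.csv, held in memory
customers_csv = io.StringIO(
    "category,name\nA, Ralph Brooks\nB, John Doe\nB, Jane Doe\n"
)

# 1. Read comma-separated data into a DataFrame
#    (skipinitialspace drops the space that follows each comma)
df = pd.read_csv(customers_csv, skipinitialspace=True)

# 2. Select only the rows where category is "B"
category_b = df[df["category"] == "B"]
print(category_b)

# 3. Merge with a second DataFrame on a common key
#    (hypothetical lookup table, one row per category)
discounts = pd.DataFrame({"category": ["A", "B"], "discount": [0.10, 0.05]})
merged = pd.merge(df, discounts, on="category", how="left")
print(merged)
```

A left merge keeps every customer row and adds the matching discount, which is usually what you want when enriching a main table with a lookup table.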

BEST BOOKS AND RESOURCES ON PANDAS

- Pandas Tutorial - At this point in your programming journey, you really want to get good at looking at open source documentation, and moving through the relevant parts of that documentation quickly. With regard to Pandas, take a look at the tutorial, and then take a look at the documentation on an "as needed" basis.

Step 6: Learn How to Build Data Pipelines

Congrats on making it this far in the blog. At this point, you know that you at least have some homework that you are going to need to do on SQL, Python, PyCharm, NumPy, and Pandas. The payoff though is that once you have got a basic handle on these different technologies, you are ready to combine them together to PULL DATA into your analysis. It is the difference between "working with the data you have" and "working with the data that you need."

Data engineering is a discipline unto itself, but the basics here are to:

- Pull data from a source (such as using the Twitter API to pull in Tweets)

- Clean data (so that bad punctuation or bad data does not affect other processing that you do "downstream")

- Place clean data in a different destination - An example here would be to pull in streaming data, clean the data in a pipeline, and then export that data into BigQuery on Google Cloud for further processing.
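The three basics above can be sketched as a tiny pipeline. This is a simplified illustration, not a production design: the extract function returns made-up records in place of a real API, and an in-memory SQLite database stands in for a destination such as BigQuery. All function and table names are hypothetical.

```python
import sqlite3


def extract():
    """Stand-in for a real source such as an API; the records are made up."""
    return ["  Great product!!! ", "", "great PRODUCT", None, "Too slow..."]


def clean(records):
    """Drop empty records, then normalize case and strip punctuation."""
    cleaned = []
    for record in records:
        if not record or not record.strip():
            continue  # skip missing or blank records
        text = record.strip().lower()
        text = "".join(ch for ch in text if ch.isalnum() or ch.isspace())
        cleaned.append(text)
    return cleaned


def load(records, conn):
    """Write the clean records to the destination table."""
    conn.execute("CREATE TABLE IF NOT EXISTS messages (body TEXT)")
    conn.executemany(
        "INSERT INTO messages (body) VALUES (?)", [(r,) for r in records]
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
load(clean(extract()), conn)
stored = [row[0] for row in conn.execute("SELECT body FROM messages ORDER BY rowid")]
print(stored)
conn.close()
```

Keeping the three stages as separate functions makes each one easy to test on its own and easy to swap out, for example replacing extract with a call to a real API.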

BEST ONLINE COURSES AND RESOURCES ON BUILDING DATA PIPELINES

- Machine Learning Mastery - The Machine Learning Mastery course from White Owl Education covers not only the setup of Python and the installation of packages (including NumPy and TensorFlow), but also how to set up a data pipeline that can read streaming information and process streaming data with machine learning.

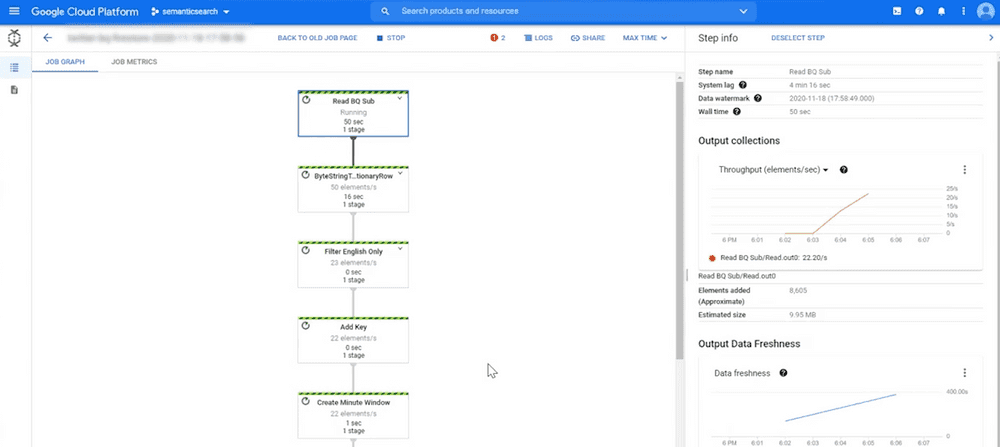

- Apache Beam - Apache Beam is a scalable framework that allows you to implement batch and streaming data processing jobs. It is a framework that you can use in order to create a data pipeline on Google Cloud or on Amazon Web Services.

Summary

In this blog post, you learned about the 6 main steps that are needed in order to take your data analysis to the next level. These steps are:

- Learn the Basics of SQL

- Learn the Basics of Python

- Learn How to Navigate Code Quickly

- Learn the Basics of NumPy

- Learn the Basics of Pandas

- Learn How to Build Data Pipelines